How could you tell whether a computer or robot is conscious and therefore whether it might have rights and responsibilities?

One of the most famous answers to the question "can a computer be conscious?" came from Alan Turing, the mathematician whose ideas laid the foundations for the modern computer.

Alan Turing.

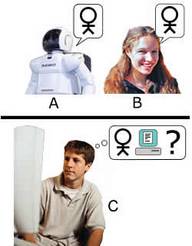

The 'Turing Test' imagines three people, A, B and C, sitting in separate rooms. They can only communicate by typing messages on a keyboard and reading the replies on a screen. One of these people, A, is replaced by a computer. The test looks to see whether person C, the tester, can reliably tell which of the others is human and which is the computer. If, after asking a lot of questions, the tester can't tell the difference, then, so the argument goes, we can assume that the computer is conscious.

We usually assume that other people are conscious without asking for proof. So if a computer is so convincing that you can’t tell the difference between it and a real person, should we extend the same courtesy to the computer and assume that it too is conscious?

Searle's Chinese Room experiment
